Cars are complex machines, blending electronics and mechanics that whizz down the highway at speeds of 60 miles per hour or more. As drivers, we don’t necessarily want to think about it, but the fact remains that there’s a lot that could go wrong. And this is with our hands on the wheel, eyes on the road, and feet on the pedals. Introduce the concept of autonomous vehicles controlled by self-driving car software running AI algorithms fed by a network of sensors plus other data, and everything gets more complicated still.

Safety first

Fortunately, folks are thinking about exactly these kinds of problems. The automotive sector has long followed a trend of introducing more and more safety systems into vehicles. Most recently, this includes automated emergency braking, a requirement for new cars and vans in Europe since July 2022. Plus, there are further enhancements in the pipeline – for example, systems that make vehicles more aware of pedestrians and cyclists, warnings for driver drowsiness and attention loss, extra energy-absorbing impact zones, and direct vision for trucks (so that lorry drivers have a better view).

Electronic control units (ECUs) – the onboard computing resources responsible for engine management, brake control, battery management, door control and various advanced driver-assistance systems – undergo a battery of tests before designs end up on the road. Firms such as National Instruments provide ECU test systems that allow car makers and their suppliers to step through a range of tests, including fault injection in software and other scenarios designed to separate the good from the bad.

So far, so good, but autonomous systems, which include self-driving vehicles, ramp up the challenges of product assurance to a whole new level given the vast range of inputs that must be considered. So-called modified condition/decision coverage (MC/DC) strategies – test cases that examine how each input condition independently affects the outcome of a decision – balloon in terms of demands on time and money. NASA estimated that MC/DC testing could eat up a whopping 85%, or more, of software development budgets. And the hurdles don’t stop there.
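To make the idea of an input ‘independently affecting’ a decision more concrete, here is a minimal Python sketch. The braking rule `decision` and its three conditions are invented purely for illustration, and the helper `independence_pairs` is a hypothetical name, not part of any standard tool. For each condition, it searches for pairs of test vectors that differ only in that condition and flip the outcome, which is the kind of evidence MC/DC asks for.

```python
from itertools import product

def decision(obstacle_ahead, closing_fast, driver_braking):
    # Hypothetical emergency-braking rule, invented for illustration only.
    return obstacle_ahead and (closing_fast or not driver_braking)

def independence_pairs(fn, n_conditions):
    """For each condition, list pairs of test vectors that differ only in
    that condition and produce different decision outcomes."""
    pairs = {i: [] for i in range(n_conditions)}
    for vec in product([False, True], repeat=n_conditions):
        for i in range(n_conditions):
            if vec[i]:
                continue  # visit each pair once, starting from the False side
            flipped = vec[:i] + (True,) + vec[i + 1:]
            if fn(*vec) != fn(*flipped):
                pairs[i].append((vec, flipped))
    return pairs

pairs = independence_pairs(decision, 3)
for i, found in pairs.items():
    print(f"condition {i}: {len(found)} independence pair(s)")
```

Even in this toy case, two of the three conditions have only a single pair of inputs that demonstrates their independent effect. With the many interacting inputs of a real driving stack, finding and running such pairs for every decision is where the time and money go.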

Black-box puzzle

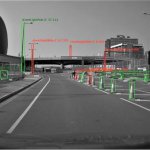

Self-driving control schemes that are built around artificial intelligence approaches such as deep learning – which makes use of layers of statistically weighted connections in a mathematical model that crudely resembles a network of neurons in the brain – are to all intents and purposes a ‘black box’. We know that the algorithms work, because we can see the results, but we can’t peer inside in the same way that developers are able to step through lines of code. Also, the connections evolve over time as the system gathers more data and adapts to changing conditions, which adds to the puzzle.

In fact, as experts at the US National Institute of Standards and Technology (NIST) point out, the testing of autonomous systems remains an unsolved problem. Today, the metrics that are used for self-driving vehicles are quantities such as ‘the number of miles driven’. But this kind of testing offers no guarantee of uncovering rare events such as a car hitting a white trailer, which has little contrast against a bright sky, and is positioned unusually across the road (as was the case for a 2016 incident involving a Tesla Model S and Freightliner Cascadia truck pulling a refrigerated semitrailer).

The clue (in terms of coming up with a solution for testing self-driving car software) can be found in the results of a series of studies that NIST ran from 1999 to 2004, which showed that virtually all software bugs and failures involved between one and six factors. Jump forward almost twenty years and NIST’s finding could turn out to be incredibly useful in performing software assurance on self-driving vehicles, an issue that wasn’t on the radar at the time.

Combinatorial nugget

Pairwise, or 2-way, testing has long been popular as a way of rooting out software bugs, but the research showed that such methods weren’t a complete strategy as ‘many failures are revealed when more than two conditions were true’. Typically, according to NIST, it took a combination of three factors to discover 30% of the faults that existed. But, by the time workers had tested to six conditions, the proportion of faults detected had got very close to 100% – or ‘pseudo’ exhaustive. The NIST team notes that while exhaustive testing is nearly always impossible, in reality you may not have to do it. Put simply, you just need to test all of the combinations that trigger faults.
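As a rough illustration of why t-way testing is so much cheaper than exhaustive testing, the Python sketch below builds a pairwise test suite for a made-up driving scenario. The parameter names and values are assumptions invented for this example, and the naive greedy search is not the algorithm used by NIST’s tools; it simply keeps picking whichever test case covers the most value combinations that haven’t been exercised yet.

```python
from itertools import combinations, product

# Hypothetical driving-scenario parameters, invented for illustration.
parameters = {
    "weather": ["clear", "rain", "fog"],
    "lighting": ["day", "dusk", "night"],
    "road": ["highway", "urban", "rural"],
    "obstacle": ["none", "pedestrian", "trailer"],
}

def t_way_targets(params, t):
    """Every combination of values for every group of t parameters."""
    targets = set()
    for group in combinations(list(params), t):
        for values in product(*(params[name] for name in group)):
            targets.add(tuple(zip(group, values)))
    return targets

def covered_by(test, t):
    """The t-way value combinations that a single test case exercises."""
    return {
        tuple((name, test[name]) for name in group)
        for group in combinations(test, t)
    }

def greedy_suite(params, t):
    """Naive greedy search: repeatedly add the candidate test that covers
    the most still-uncovered t-way combinations."""
    names = list(params)
    candidates = [dict(zip(names, vals)) for vals in product(*params.values())]
    remaining = t_way_targets(params, t)
    suite = []
    while remaining:
        best = max(candidates, key=lambda c: len(covered_by(c, t) & remaining))
        suite.append(best)
        remaining -= covered_by(best, t)
    return suite

exhaustive_count = len(list(product(*parameters.values())))
suite = greedy_suite(parameters, 2)
print(f"exhaustive tests: {exhaustive_count}")
print(f"pairwise tests:   {len(suite)}")
```

Changing the `2` to a `3` or higher asks for 3-way coverage and beyond, at the cost of a larger suite. Because this sketch enumerates every possible test case as a candidate, it only suits small examples; dedicated tools are needed at realistic scale.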

In simulations, results gathered by engineers at IBM suggest that there’s mileage in rule-based searching of collision test cases. And examples of combinatorial approaches can be found in other sectors too, such as industrial controls, aerospace, and cybersecurity. Usefully, NIST’s Information Technology Lab has a number of downloadable tools that it has made available for users wanting to carry out combinatorial testing. These include its Advanced Combinatorial Testing System, dubbed ACTS, which generates test sets that ensure t-way coverage of input parameter values.