Will OpenAI join other tech giants in making its own AI chips?

- OpenAI is considering developing its own AI chips to avoid a costly reliance on Nvidia.

- The company has reportedly evaluated an acquisition to speed up the process.

- Either way, bringing a custom chip to market could take years.

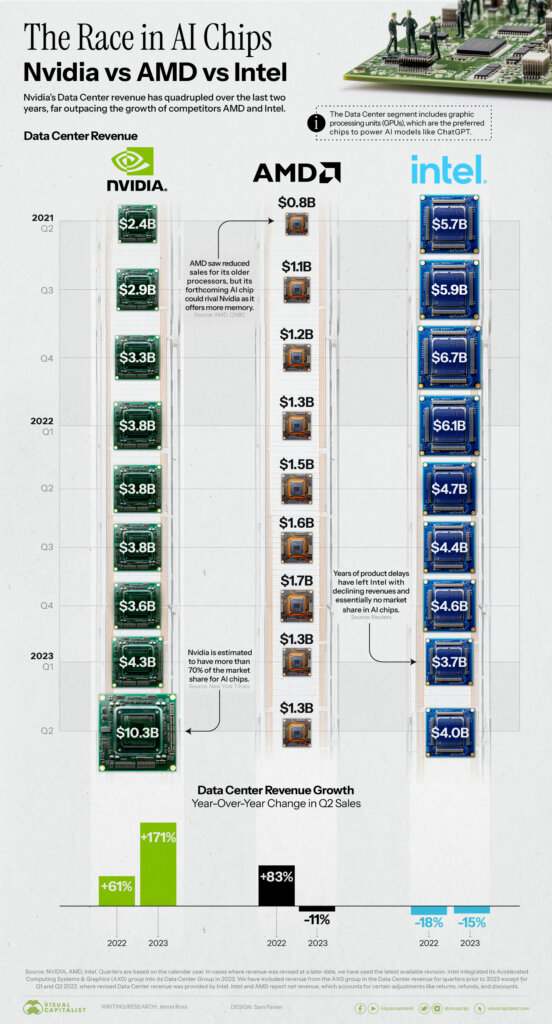

The AI chip market has emerged as a pivotal force in the technology industry, driven by growing demand for AI across a wide range of applications. Chips designed specifically for AI workloads have become the cornerstone of AI innovation, and companies like Nvidia, Intel, and AMD compete fiercely to produce chips that offer more computational power, energy efficiency, and scalability.

Nvidia in particular has played a pioneering role in the AI chip market, establishing itself as a leading provider of high-performance graphics processing units (GPUs) tailored for AI and machine learning (ML) workloads. The H100, announced last year, is Nvidia’s latest flagship AI chip. It succeeds the A100, a roughly US$10,000 chip often called the “workhorse” of AI applications. Developers today primarily use the H100 to build so-called large language models (LLMs), which are at the heart of AI applications like OpenAI’s ChatGPT.

Running those systems is expensive. Training requires powerful computers to churn through terabytes of data for days or weeks, and generating text, images, or predictions from a trained model also takes hefty computing power. Training large models like GPT requires hundreds of high-end Nvidia GPUs working together.

The catch is that most of the world’s AI chips are produced by Nvidia. Since OpenAI’s ChatGPT became a global phenomenon, the California-based chipmaker has become a one-stop shop for AI development, offering everything from chips to software and other services. The world’s insatiable hunger for processing power even pushed Nvidia past a US$1 trillion valuation this year.

Nvidia vs. AMD vs. Intel: Comparing AI chip sales. Source: Visual Capitalist

While tech giants like Google, Amazon, Meta, IBM, and others have also produced AI chips, Nvidia accounts for more than 70% of AI chip sales today. It holds an even more prominent position in training generative AI models, according to the research firm Omdia. For context, OpenAI’s ChatGPT was developed on a Microsoft supercomputer that uses 10,000 Nvidia GPUs.

But from OpenAI’s point of view, while Nvidia is necessary for the company’s current operations, the dependency may need to be revisited in the long term. If, as Bernstein analyst Stacy Rasgon estimates, each ChatGPT query costs the company around 4 cents, the total bill will only keep growing alongside ChatGPT usage.

The Reuters report in which Rasgon is quoted also said that if OpenAI’s query volume grew to one-tenth of Google’s over time, it would require US$48 billion in GPUs to scale to that level.

Beyond that, it would need to spend about US$16 billion a year to keep up with demand. This is an existential problem for the company and for the industry at large. With that in mind, OpenAI has been considering developing its own AI chips to avoid a costly reliance on Nvidia.
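To make the scale of those estimates concrete, here is a minimal back-of-the-envelope sketch in Python. The three constants are the figures quoted above. The function names and the daily query volume are hypothetical, chosen only for illustration, and adding the annual spend on top of the initial build-out is a simplifying assumption rather than a forecast.

```python
# Figures quoted in the article (Bernstein analyst Stacy Rasgon, via Reuters).
COST_PER_QUERY_CENTS = 4        # roughly 4 cents per ChatGPT query
GPU_BUILDOUT_BILLION_USD = 48   # GPUs needed to reach 1/10th of Google's query volume
ANNUAL_SPEND_BILLION_USD = 16   # yearly spend to keep up with demand after that


def daily_query_cost_usd(queries_per_day: int) -> float:
    """Serving cost in US dollars for one day at the quoted per-query estimate."""
    return queries_per_day * COST_PER_QUERY_CENTS / 100


def cumulative_hardware_spend_billion_usd(years: int) -> int:
    """Initial GPU build-out plus the quoted annual spend over `years` years.

    Assumption: the annual figure is simply added to the build-out each year.
    """
    return GPU_BUILDOUT_BILLION_USD + ANNUAL_SPEND_BILLION_USD * years


if __name__ == "__main__":
    # HYPOTHETICAL volume, chosen only for illustration; not from the article.
    hypothetical_queries_per_day = 10_000_000
    print(daily_query_cost_usd(hypothetical_queries_per_day))
    print(cumulative_hardware_spend_billion_usd(3))
```

At the hypothetical 10 million queries a day, the per-query estimate works out to US$400,000 a day in serving costs. Under the simple additive assumption, three years at one-tenth of Google’s volume would total US$96 billion in hardware spending.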

Even CEO Sam Altman has indicated that the effort to get more chips is tied to two major concerns: a shortage of the advanced processors that power OpenAI’s software and the “eye-watering” costs associated with running the hardware necessary to power its efforts and products.

OpenAI’s plans on AI chips: To make or not to make?

According to people familiar with recent internal discussions, as reported by Reuters, the company has been actively weighing the matter but has yet to decide on a next step. The discussions have so far centered on options for addressing the shortage of the expensive AI chips that OpenAI relies on.

Will OpenAI create its own AI chips? If so, how, and by when?

Meanwhile, there have been rumors that Microsoft is also looking in-house and has accelerated its work on ‘Codename Athena,’ a project to build its own AI chips.

While it’s unclear if OpenAI is on the same project, Microsoft reportedly plans to make its AI chips available more broadly within the company as early as this year.

A report by The Verge indicated that Microsoft may also have a roadmap for the chips that includes multiple future generations. A separate report suggested that the chip reveal will likely occur at Microsoft’s Ignite conference in Seattle in November. If that happens, Athena is expected to compete with Nvidia’s flagship H100 GPU for AI acceleration in data centers.

“The custom silicon has been secretly tested by small groups at Microsoft and partner OpenAI,” according to Maginative’s report. However, if OpenAI were to go ahead and build a custom chip independent of Microsoft, it would require a heavy investment that could amount to hundreds of millions of dollars a year, with no guarantee of success.

What about an acquisition?

The company has been exploring making its own AI chips since late last year, and sources claim that the ChatGPT maker has also evaluated a potential acquisition target. Acquiring a chip company could speed up the process of building OpenAI’s chip, as it did for Amazon when it acquired Annapurna Labs in 2015.

“OpenAI had considered the path to the point where it performed due diligence on a potential acquisition target, according to one of the people familiar with its plans,” Reuters stated. Even if OpenAI has plans for a custom chip – including an acquisition – the effort will likely take several years.

In short, whatever the path may be, OpenAI will still be highly dependent on Nvidia for a while.