Exploring the groundbreaking EU AI Act

- The AI Act introduces transparency requirements for developers of general-purpose AI systems such as ChatGPT.

- The Act also bans unethical practices, such as indiscriminately scraping images from the internet to build facial recognition databases.

- Fines can reach €35 million or 7% of global turnover, depending on the nature of the infringement and the company’s size.

In a significant step toward regulating the rapidly evolving field of AI, the European Union has reached a milestone: a provisional agreement on the EU AI Act. This landmark legislation marks a defining moment for the region, setting the stage for comprehensive rules governing the development, deployment, and use of AI technologies.

It all started in April 2021, when the European Commission proposed an AI Act to establish harmonized rules for the technology across the EU. At the time, the draft may have seemed suited to the state of AI, but it took more than two years for the European Parliament to approve its version of the regulation. In that time, the AI landscape was far from static. What the bloc did not see coming was the release and rapid spread of OpenAI’s ChatGPT, which showcased the capabilities of generative AI, a subset of AI that was unfamiliar to most of us.

As more and more generative AI models entered the market following ChatGPT’s dizzying success, the AI Act was still taking shape. Caught off guard by the explosive growth of these systems, European lawmakers faced the urgent task of deciding how to regulate them under the proposed legislation. Still, the European Union has a habit of staying ahead of the curve, especially when it comes to regulating the tech world.

After a long and arduous period of amendment and negotiation, the European Parliament and the bloc’s 27 member countries finally overcame significant differences on controversial points, including generative AI and police use of facial recognition surveillance, and reached a provisional political agreement on the AI Act last week.

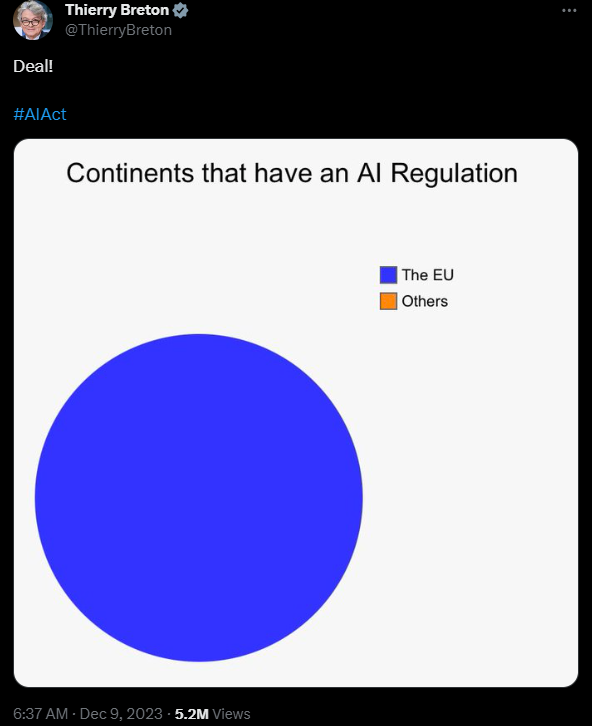

“Deal!” tweeted European Commissioner Thierry Breton just before midnight. “The EU becomes the first continent to set clear rules for using AI.” This outcome followed extensive closed-door negotiations between the European Commission, European Council, and European Parliament throughout the week, ending after three days of rigorous negotiations spanning thirty-six hours.

Yes, but how good is it? Source: Thierry Breton on X

“Parliament and Council negotiators reached a provisional agreement on the AI Act on Friday. This regulation aims to ensure that fundamental rights, democracy, the rule of law, and environmental sustainability are protected from high-risk AI while boosting innovation and making Europe a leader. The rules establish obligations for AI based on its potential risks and level of impact,” the European Parliament said.

The AI Act was initially crafted to address the risks of specific AI functions, categorized by risk level from low to unacceptable. However, lawmakers pushed to extend it to foundation models, the advanced systems that form the backbone of general-purpose AI services such as ChatGPT and Google’s Bard chatbot.

Dragoș Tudorache, a member of the European Parliament who has spent four years drafting AI legislation, said the AI Act sets rules for large, powerful AI models to ensure they do not pose systemic risks to the Union, and offers strong safeguards for citizens and democracies against any abuse of technology by public authorities.

“It protects our SMEs, strengthens our capacity to innovate and lead in AI, and protects vulnerable sectors of our economy. The EU has made impressive contributions to the world; the AI Act is another one that will significantly impact our digital future,” he added.

What makes up the EU AI Act?

Although the EU AI Act is notable as the first law of its kind, its full text has not yet been released. A public version is not expected for several weeks, so unless the text leaks, it is difficult to give a definitive assessment of its scope and implications.

Members of the European Parliament take part in a voting session during a plenary session at the European Parliament in Strasbourg, eastern France, on November 22, 2023. (Photo by FREDERICK FLORIN / AFP).

EU policymakers adopted a “risk-based approach” to the AI Act, concentrating the strictest oversight on specific applications. For instance, companies developing AI tools with high potential for harm, especially in areas like hiring and education, would have to provide regulators with risk assessments, breakdowns of the data used to train their systems, and assurances that the software does not cause harm, such as perpetuating racial biases.

The creation and deployment of such systems would require human oversight. Certain practices, such as indiscriminate image scraping to build facial recognition databases, would be banned outright. In addition, according to EU officials and earlier versions of the law, chatbots and software that create manipulated images, including “deepfakes,” would have to disclose explicitly that their output was generated by AI.

Use of facial recognition software by law enforcement and governments would be limited, with certain safety and national security exemptions. The AI Act also prohibits biometric scanning that categorizes people by sensitive characteristics, such as political or religious beliefs, sexual orientation, or race. “Officials said this was one of the most difficult and sensitive issues in the talks,” according to a Bloomberg report.

While Parliament pushed for a complete ban last spring, EU countries lobbied for exceptions for national security and law enforcement. In the end, the parties compromised, agreeing to restrict the use of the technology in public spaces while adding safeguards.

The proposed legislation sets financial penalties for companies that break the rules, with fines of up to €35 million or 7% of global turnover. The severity of the penalty would depend on the nature of the violation and the size of the company. While civil servants will finalize some specifics in the coming weeks, negotiators have broadly agreed on how to regulate generative AI.

Some 85% of the technical wording in the bill has already been agreed on, according to Carme Artigas, AI and Digitalization Minister for Spain (which currently holds the rotating EU presidency).

EU lawmakers were determined to reach an agreement on the AI Act this year, partly to drive home the message that the EU leads on AI regulation, especially after the US unveiled an executive order on AI and the UK hosted the international AI Safety Summit. China has also developed its own AI principles.

Next year’s European elections in June were also quickly closing the window to finalize the Act under the current Parliament. Despite these pressures, the EU’s success in agreeing on the first comprehensive regulatory framework for AI is impressive.