Google robots? We’ll stick with Roomba, thanks

- Google robots will have a set of advances to make them more human.

- A “robot constitution” will apparently ensure the safety of robots in the home.

- Because the history of science-fiction has told us that such constitutions never go wrong. Ever.

Acting on the assumption that AI-powered robots will one day be indispensable to our domestic lives, Google DeepMind has introduced a set of advances that aim to ensure the technology acts as humanly as possible.

A future where AI-powered robots write our shopping lists, fold our laundry and ensure the coffee’s always hot will only be possible with well-behaved robots.

For humans, chores are straightforward, mind-numbing tasks.

Robots, however, need an embedded “high-level understanding of the world” to perform them. Aiming to arm Google robots with that understanding, one of DeepMind’s new advances is called AutoRT. It’s a “system that harnesses the potential of large foundational models” to make robots more clever.

Creativity and the science of robotics

These foundational models collect experimental training data that allows the robots to learn about their environment on the job. They include the kinds of LLMs that power AI poster child ChatGPT or Google’s Gemini.

LLM usage in a robot includes suggesting “a list of creative tasks that the robot could carry out, such as ‘place the snack onto the countertop.’” That’s not how we’d define creative but whatever floats your imaginative boat…

Visual language models are also used in conjunction with a camera to help robots identify objects around them. It should go without saying that this ability is critical to safety if robots are to be let loose in the home or office.

The DeepMind team said AutoRT was evaluated over seven months in varied real-world situations. It “safely orchestrated as many as 20 robots simultaneously” and up to 52 unique robots in total.

Now, with an understanding of how AI and robotics work, we could assume that the safe operation of these robots is similar to that of driverless vehicles, based on fairly binary yes/no rules: don’t pour hot coffee if a mug isn’t under the flow, for instance.

Wrong! The safety of Google’s AI-enabled robots is apparently the result of a “robot constitution” written by the company. It is inspired by the “Three Laws of Robotics,” a set of rules devised by sci-fi author Isaac Asimov, which includes the instruction that “a robot may not injure a human being.”

To reiterate: a “robot constitution,” based on the laws devised by a sci-fi writer.

It’s almost as if no one at Google has ever met a sci-fi writer…

In practice, this constitution is meant to give the LLMs of Google robots “a set of safety-focused prompts to abide by when selecting tasks.”
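For the curious, here is a minimal sketch of what “safety-focused prompts” could look like in practice. It is our own illustration, not Google’s code: the `CONSTITUTION` list and the `build_task_prompt` function are hypothetical names, and only the first rule comes from the Asimov quote above. The second is a placeholder we made up.

```python
# Hypothetical "robot constitution". Only the first rule is quoted in the
# article; the second is an invented placeholder for illustration.
CONSTITUTION = [
    "A robot may not injure a human being.",
    "Do not attempt tasks involving sharp objects.",
]


def build_task_prompt(scene: str, rules: list[str]) -> str:
    """Prepend numbered safety rules to a task-selection prompt for an LLM."""
    numbered = "\n".join(f"{i}. {rule}" for i, rule in enumerate(rules, start=1))
    return (
        "You are choosing a task for a robot.\n"
        f"Rules you must follow:\n{numbered}\n"
        f"Scene: {scene}\n"
        "Suggest one task that breaks none of the rules."
    )


if __name__ == "__main__":
    # Print the prompt a (hypothetical) LLM would receive.
    print(build_task_prompt("a snack on a table next to a countertop", CONSTITUTION))
```

Note that nothing here enforces anything. The rules are just text handed to the model, which is rather the point of our scepticism.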

Moving swiftly on lest we become too scathing, Google has introduced two other advances that will make robots much more efficient than they currently are.

Welcome to the room, SARA

A new system called SARA-RT, short for Self-Adaptive Robust Attention for Robotics Transformers, aims to speed up decision-making in the neural networks underlying AI transformer models.

A DeepMind blogpost explained that “while transformers are powerful, they can be limited by computational demands that slow their decision-making.”

There’s also a model called RT-Trajectory, meant to give robots a clearer understanding of what physical actions to take when following a set of instructions. The training dataset includes videos overlaid with 2D sketches of how a robot would perform a task. This is meant to provide “low-level, practical visual hints” in the process.

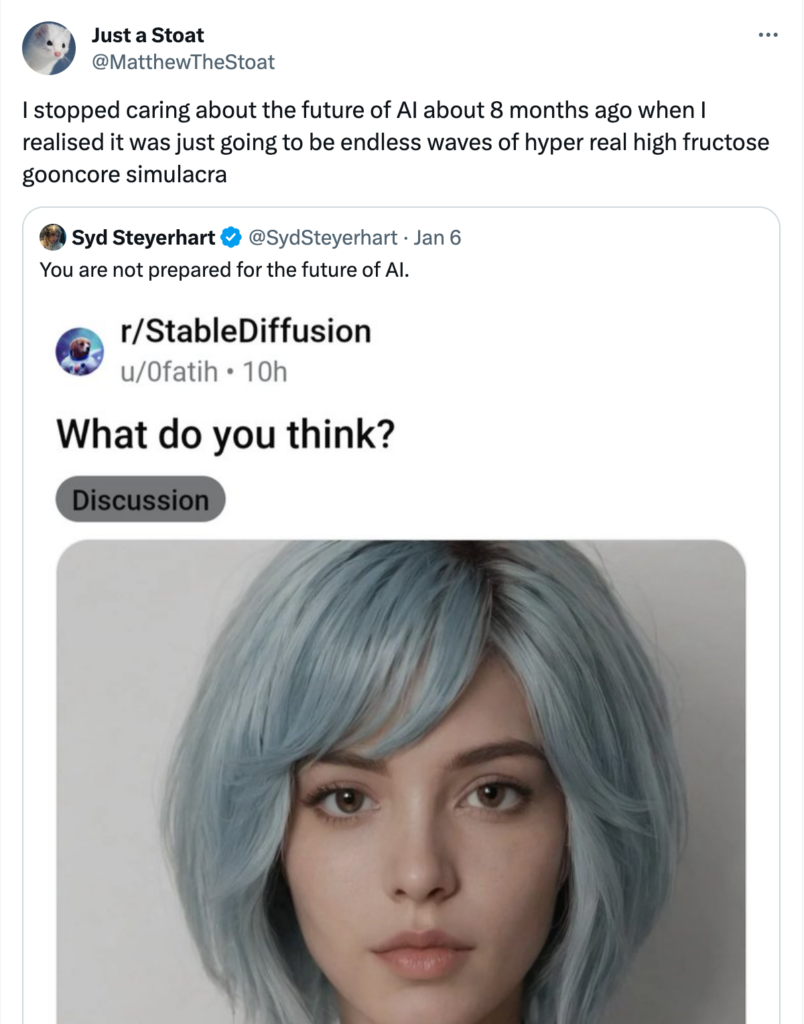

That’s a lot of internet jargon to say “AI’s main use so far has been generating images of women.”

Given the current state of AI-enabled chatbots, we aren’t holding out much hope that AI-powered Google robots will be cleaning up after us any time soon. At least not any better than everyone’s good friend Roomba.