Nvidia is championing the era of AI – and reaping massive rewards

- Nvidia posted record revenue of US$13.51 billion for its fiscal second quarter, up 88% from Q1 and 101% from a year ago.

- Nvidia’s performance was driven by its data center business, which includes the A100 and H100 AI chips needed to build and run AI applications like ChatGPT.

- Despite a supply crunch for its most powerful AI chip, Nvidia says supply will ramp up over the next year.

If this year is dubbed the year of generative AI, it is rightfully also the year of Nvidia Corp. The company's graphics processing units (GPUs) have sat at the center of the generative AI boom over the last ten months, making it one of the biggest winners of the artificial intelligence revolution. In short, Nvidia has gone from being a gaming graphics specialist to an AI titan in less than a year.

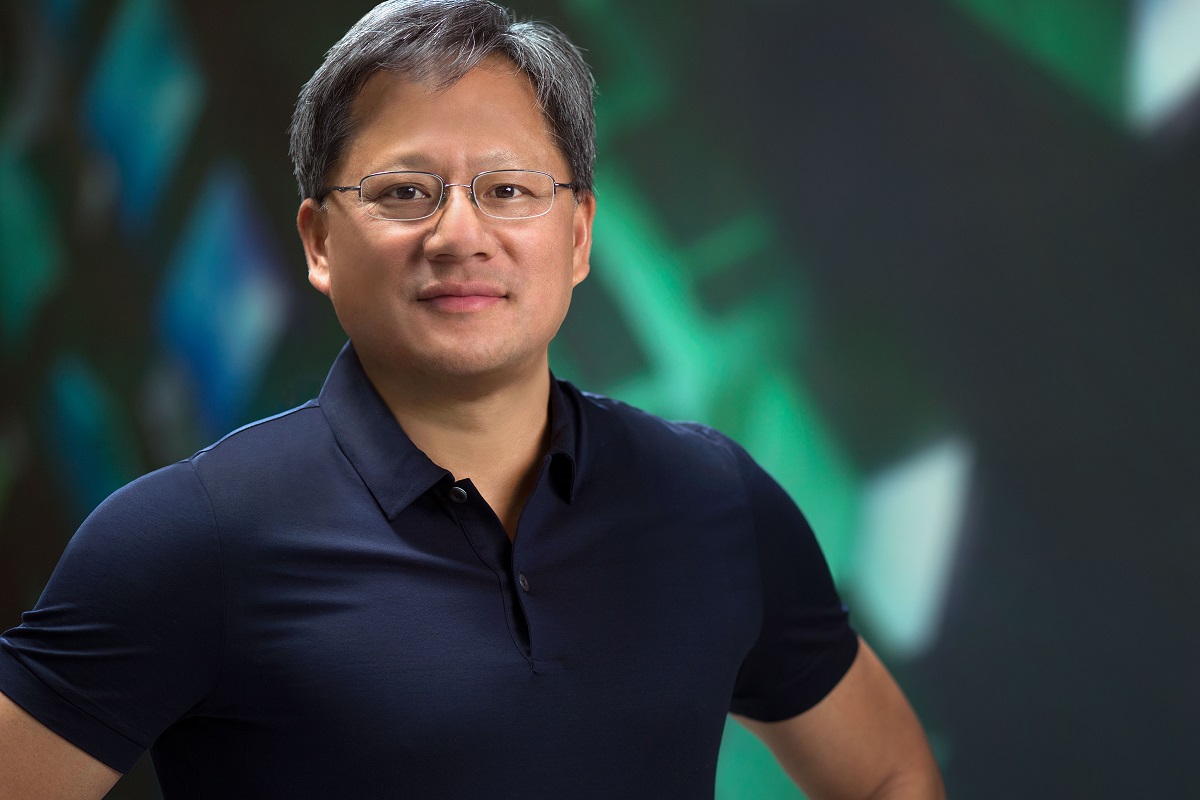

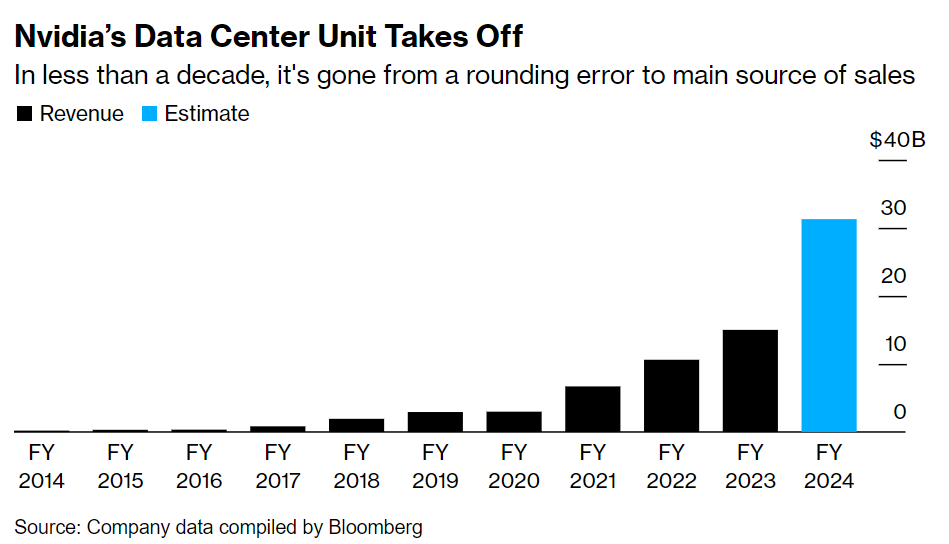

“A new computing era has begun. Companies worldwide are transitioning from general-purpose to accelerated computing and generative AI,” Jensen Huang, founder and CEO of Nvidia, declared. Coming from the head of a company that produces 70% of the world's AI chips, the claim carries weight. Nvidia's former AI side gig has become its main driver of new business.

Nvidia has hit highs this year thanks to its AI chips.

The company's A100 and H100 AI chips are primarily used to build and run AI applications, most notably OpenAI's ChatGPT. As demand for these compute-intensive applications has grown, data center infrastructure has increasingly shifted to support them. So much so that data center sales now make up most of Nvidia's revenue, compensating for a slowdown in its gaming segment.

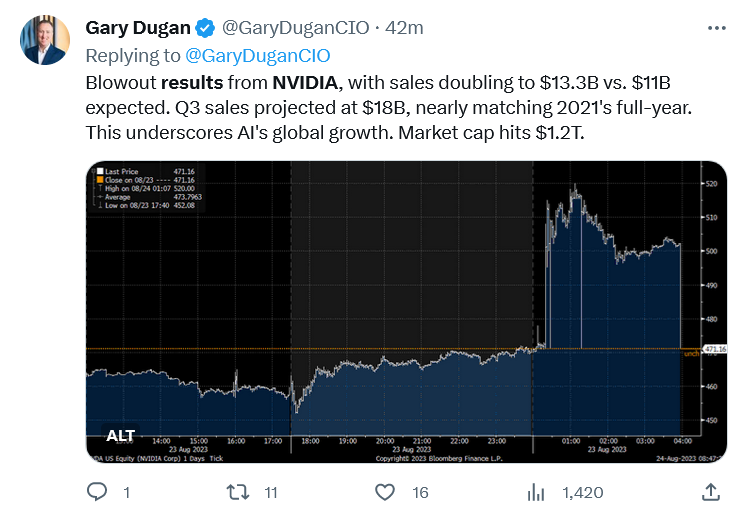

In short, being the largest producer of the chip technology powering generative AI pays, and pays well. The company reported revenue of US$13.51 billion for its second quarter, up 88% from the first quarter (Q1) and 101% from a year ago. According to data from Bloomberg, analysts had expected revenue of US$11.04 billion.

All of this came amid a massive rise in Nvidia's valuation over the past year as investors bet on a growing appetite for AI. “NVIDIA GPUs connected by our Mellanox networking and switch technologies and running our CUDA AI software stack make up the computing infrastructure of generative AI,” Huang said in a statement released on August 23, 2023.

Nvidia also reported record data center revenue of US$10.32 billion, up 141% from Q1 and 171% from a year ago. Huang said that during the quarter, major cloud service providers announced massive NVIDIA H100 AI infrastructures. “Leading enterprise IT systems and software providers announced partnerships to bring NVIDIA AI to every industry. The race is on to adopt generative AI,” he said.

Nvidia’s data center growth comparison. Source: Bloomberg

For its gaming segment, Nvidia said second-quarter revenue came in at US$2.49 billion, an 11% increase from the previous quarter and 22% higher than a year ago. “Gaming revenue was up 22% from a year ago and up 11% sequentially, primarily reflecting demand for our GeForce RTX 40 Series GPUs, based on the NVIDIA Ada Lovelace architecture following normalization of channel inventory levels,” Nvidia CFO Colette Kress said in her commentary.

Meanwhile, the automotive segment recorded second-quarter revenue of US$253 million, down 15% from the previous quarter but up 15% from a year ago. “Sales of self-driving platforms primarily drove the year-on-year increase. The sequential decrease primarily reflects lower overall auto demand, particularly in China,” Kress added.

A Bloomberg report indicated that Nvidia's quarterly sales overtook those of Intel Corp. for the first time, a milestone in its own right. “Though Nvidia has had a higher valuation than Intel since 2020, posting more revenue than the chip pioneer shows just how pervasive its products have become,” the report reads.

Nvidia is proving that the AI demand is insatiable – for now

Analysts have estimated that demand for Nvidia's prized AI chips exceeds supply by at least 50%, which suggests the current imbalance could persist for several quarters. But Huang assured investors during the earnings call this week that supply will “substantially increase for the rest of this year and next year.”

The company relies on vendors such as Taiwan Semiconductor Manufacturing Co. and Samsung Electronics Co. for components, and a lack of adequate inventory has been seen as a challenge to its growth run. That is perhaps why Nvidia is reportedly preparing to triple production of the US$40,000 processor powering the generative AI revolution.

The Financial Times recently reported that the Silicon Valley chip giant wants to boost production of its sought-after H100 processor, based on the Hopper architecture named after computer scientist Grace Hopper, with the aim of shipping between 1.5 million and two million units next year, up from its target of 500,000 this year.

It’s fair to say Nvidia’s had a decent year on the back of the AI market.

Kress also addressed the Biden administration's consideration of new regulations that would limit sales of the scaled-down GPUs Nvidia designed to stay within the first round of US export restrictions on China.

“Over the long term, restrictions prohibiting the sale of our data center GPUs to China, if implemented, will result in a permanent loss of an opportunity for the US industry to compete and lead in one of the world’s largest markets,” Kress reiterated.

Looking ahead, Nvidia forecast revenue of US$16 billion, plus or minus 2%, for the third quarter of the current fiscal year, again far above analysts' projection of US$12.6 billion.